Small Language Models Are the New Rage, Researchers Say

The original version of this story appeared in Quanta Magazine.

Large language models work well because they're so large. The latest models from OpenAI, Meta, and DeepSeek use hundreds of billions of “parameters,” the adjustable knobs that determine connections among data and get tweaked during the training process. With more parameters, the models are better able to identify patterns and connections, which in turn makes them more powerful and accurate.

But this power comes at a cost. Training a model with hundreds of billions of parameters takes huge computational resources. To train its Gemini 1.0 Ultra model, for example, Google reportedly spent $191 million. Large language models (LLMs) also require considerable computational power each time they answer a request, which makes them notorious energy hogs. A single query to ChatGPT consumes about 10 times as much energy as a single Google search, according to the Electric Power Research Institute.

In response, some researchers are now thinking small. IBM, Google, Microsoft, and OpenAI have all released small language models (SLMs) that use a few billion parameters, a fraction of what their LLM counterparts use.

Small models are not used as general-purpose tools like their larger cousins. But they can excel at specific, more narrowly defined tasks, such as summarizing conversations, answering patient questions as a health care chatbot, and gathering data in smart devices. “For a lot of tasks, an 8-billion-parameter model is actually pretty good,” said Zico Kolter, a computer scientist at Carnegie Mellon University. They can also run on a laptop or cell phone instead of a huge data center. (There's no consensus on the exact definition of “small,” but the new models all max out at around 10 billion parameters.)

To optimize the training process for these small models, researchers use a few tricks. Large models often scrape raw training data from the internet, and this data can be disorganized, messy, and hard to process. But these large models can then generate a high-quality data set that can be used to train a small model. The approach, called knowledge distillation, gets the larger model to effectively pass on its training, like a teacher giving lessons to a student. “The reason [SLMs] get so good with such small models and such little data is that they use high-quality data instead of the messy stuff,” Kolter said.
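In the data-generation approach described above, the teacher writes training examples for the student. Another common variant of knowledge distillation, sketched below in Python, trains the student to match the teacher's temperature-softened output probabilities. This is a minimal illustration under stated assumptions, not the recipe any of the companies mentioned here actually use: the teacher logits, temperature, and learning rate are made up, and for simplicity the “student” is just a vector of logits nudged by gradient descent rather than a real network.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """T^2 * KL(teacher || student) on temperature-softened distributions."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    return temperature ** 2 * kl

def distill_step(student_logits, teacher_logits, temperature=2.0, lr=0.5):
    """One gradient-descent step on the student's logits.

    The gradient of T^2 * KL(p_t || p_s) with respect to student logit z_i
    is T * (p_s[i] - p_t[i]).
    """
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    return [z - lr * temperature * (ps - pt)
            for z, ps, pt in zip(student_logits, p_s, p_t)]

# Hypothetical teacher logits for a 3-way prediction; the student starts uniform.
teacher = [4.0, 1.0, 0.5]
student = [0.0, 0.0, 0.0]

loss_before = distillation_loss(student, teacher)
for _ in range(200):
    student = distill_step(student, teacher)
loss_after = distillation_loss(student, teacher)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.2e}")
```

Raising the temperature spreads probability onto the teacher's less likely answers, so the student learns how the teacher ranks the alternatives rather than only which answer wins.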

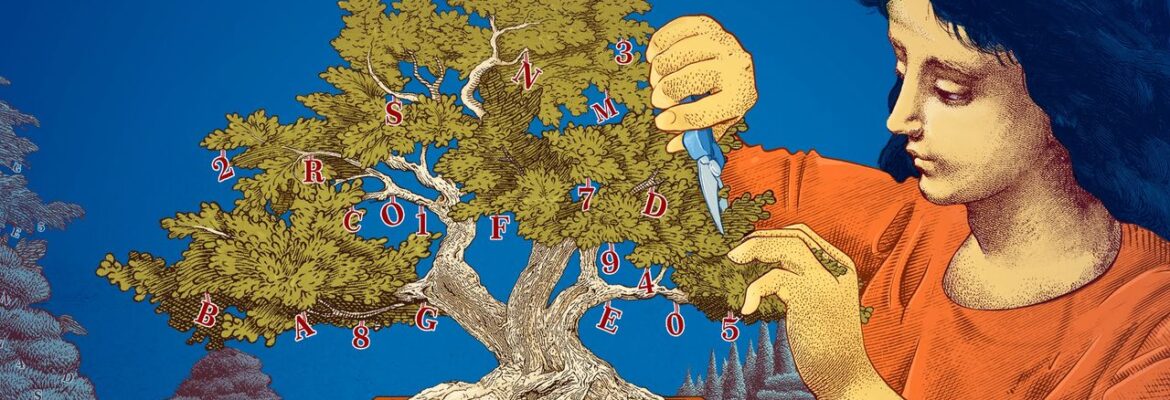

Researchers have also explored ways to create small models by starting with large ones and trimming them down. One method, known as pruning, entails removing unnecessary or inefficient parts of a neural network, the sprawling web of connected data points that underlies a large model.

Pruning was inspired by a real-life neural network, the human brain, which gains efficiency by snipping connections between synapses as a person ages. Today's pruning approaches trace back to a 1989 paper in which the computer scientist Yann LeCun, now at Meta, argued that up to 90% of the parameters in a trained neural network could be removed without sacrificing efficiency. He called the method “optimal brain damage.” Pruning can help researchers fine-tune a small language model for a particular task or environment.
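The core idea of “optimal brain damage” fits in a few lines. Each weight gets a saliency, s_k = 0.5 · h_kk · w_k², a second-order estimate of how much the training loss would rise if that weight were set to zero, where h_kk is the matching diagonal entry of the loss's Hessian. The Python sketch below is a simplified stand-in, assuming a linear model with squared-error loss so the Hessian diagonal has a closed form; the original method worked on full neural networks and retrained after pruning, which this sketch omits. The data, the weights, and the helper names such as `prune_lowest` are hypothetical.

```python
def predict(x, w):
    return sum(xj * wj for xj, wj in zip(x, w))

def mse_loss(X, y, w):
    """Squared-error loss L(w) = 1/(2n) * sum_i (y_i - x_i . w)^2."""
    n = len(X)
    return sum((yi - predict(xi, w)) ** 2 for xi, yi in zip(X, y)) / (2 * n)

def hessian_diagonal(X):
    """For the loss above the Hessian is X^T X / n, so h_kk = mean of x_ik^2."""
    n = len(X)
    return [sum(xi[k] ** 2 for xi in X) / n for k in range(len(X[0]))]

def obd_saliency(X, w):
    """s_k = 0.5 * h_kk * w_k^2.

    Second-order estimate of the loss increase from zeroing w_k, assuming the
    gradient is ~0 (training has converged) and ignoring off-diagonal Hessian
    terms, as optimal brain damage does.
    """
    return [0.5 * hk * wk ** 2 for hk, wk in zip(hessian_diagonal(X), w)]

def prune_lowest(X, w, count):
    """Return a copy of w with the `count` least salient weights set to zero."""
    s = obd_saliency(X, w)
    order = sorted(range(len(w)), key=lambda k: s[k])
    pruned = list(w)
    for k in order[:count]:
        pruned[k] = 0.0
    return pruned

# Toy data with orthogonal feature columns (hypothetical, for illustration).
X = [[2.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 3.0],
     [2.0, 0.0, 0.0]]
y = [4.0, 0.1, 6.0, 4.2]
w = [2.05, 0.1, 2.0]  # least-squares weights for this data

saliency = obd_saliency(X, w)          # weight 1 is by far the least salient
pruned = prune_lowest(X, w, count=1)   # [2.05, 0.0, 2.0]
loss_increase = mse_loss(X, y, pruned) - mse_loss(X, y, w)
print(saliency, pruned, loss_increase)
```

Because the feature columns here are orthogonal and the weights sit at the least-squares minimum, the saliency of the pruned weight (0.00125) matches the actual loss increase; in a real network it is only an approximation, which is one reason pruned models are usually retrained.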

For researchers interested in how language models do the things they do, smaller models offer an inexpensive way to test novel ideas. And because they have fewer parameters than large models, their reasoning might be more transparent. “If you want to make a new model, you need to try things,” said Leshem Choshen, a research scientist at the MIT-IBM Watson AI Lab. “Small models allow researchers to experiment with lower stakes.”

The big, expensive models, with their ever-increasing parameter counts, will remain useful for applications like general-purpose chatbots, image generators, and drug discovery. But for many users, a small, targeted model will work just as well, while being easier for researchers to train and build. “These efficient models can save money, time, and compute,” Choshen said.

Original story reprinted with permission from Quanta Magazine, an editorially independent publication of the Simons Foundation whose mission is to enhance public understanding of science by covering research developments and trends in mathematics and the physical and life sciences.